Object Classification Model: ResNet50

This document demonstrates how to run the ResNet50 object classification model on Allwinner T527/A733 series chips.

This example uses the pre-trained ONNX model resnet50-v2-7.onnx to demonstrate the complete process of converting a model and running inference on the board.

Deploying ResNet50 on the board requires two steps:

- On the PC, use the ACUITY Toolkit to convert models from different frameworks into NBG format.

- On the board, use the awnn API to run inference on the model.

Download ai-sdk Example Repository

git clone https://github.com/ZIFENG278/ai-sdk.git

PC Model Conversion

Radxa provides a pre-converted resnet50.nb model. Users can directly refer to Board-side inference ResNet50 and skip the PC model conversion section.

- Enter the ACUITY Toolkit Docker container

To prepare the ACUITY Toolkit Docker environment, refer to ACUITY Toolkit environment configuration.

Configure environment variables

X86 Linux PC

cd ai-sdk/models

source env.sh v3 # NPU_VERSION

For NPU_VERSION, select v3 for A733 and v2 for T527.

Tip: For NPU version selection, refer to the NPU version comparison table.

- Download the ResNet50 ONNX model

X86 Linux PC

mkdir resnet50-sim && cd resnet50-sim

wget https://github.com/onnx/models/raw/refs/heads/main/validated/vision/classification/resnet/model/resnet50-v2-7.onnx -O resnet50.onnx

- Fix the input size

NPU inference only accepts a fixed input size. Here, onnxsim is used to fix the input size.

X86 Linux PC

pip3 install onnxsim onnxruntime

onnxsim resnet50.onnx resnet50-sim.onnx --overwrite-input-shape 1,3,224,224

- Create the quantization calibration set

Use an appropriate number of images as the quantization calibration set, and save the paths of the calibration images in dataset.txt, one path per line.

X86 Linux PC

vim dataset.txt

./space_shuttle_224x224.jpg
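For more than a handful of calibration images, writing dataset.txt by hand becomes tedious. The following is a minimal sketch that lists the images in a directory and writes them in the same `./name.jpg` form shown above. The function name `write_dataset_list` and the accepted extensions are illustrative assumptions, not part of the ai-sdk; it also assumes that the images sit next to dataset.txt, as in this example.

```python
from pathlib import Path


def write_dataset_list(image_dir, out_file="dataset.txt",
                       exts=(".jpg", ".jpeg", ".png")):
    """Write one relative image path per line (e.g. ./space_shuttle_224x224.jpg).

    Hypothetical helper: assumes the images live in the same directory
    as the generated dataset file.
    """
    image_dir = Path(image_dir)
    # Sort so the calibration order is reproducible between runs.
    images = sorted(
        p for p in image_dir.iterdir()
        if p.is_file() and p.suffix.lower() in exts
    )
    lines = [f"./{p.name}" for p in images]
    out_path = image_dir / out_file
    out_path.write_text("\n".join(lines) + ("\n" if lines else ""))
    return lines
```

Calling `write_dataset_list(".")` from inside resnet50-sim/ would then produce a dataset.txt equivalent to the hand-written one.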

-

Make model input output file

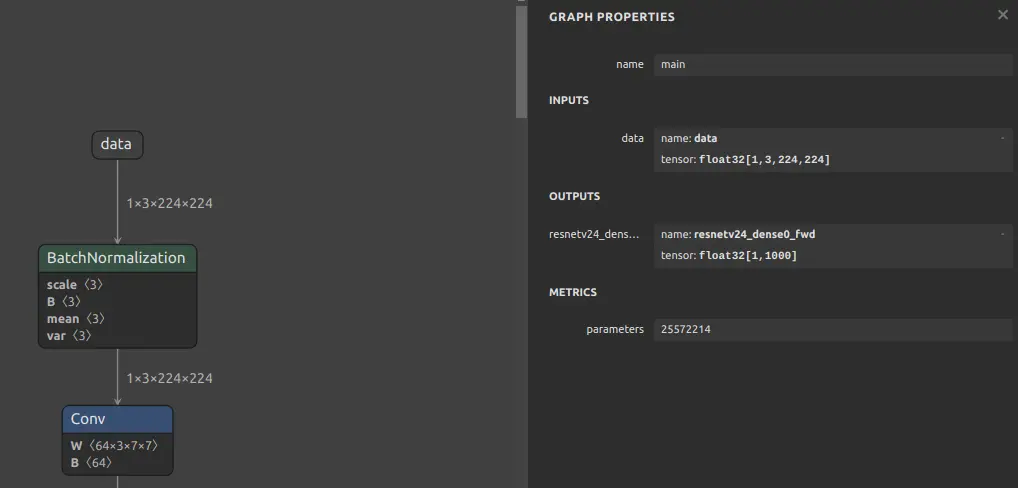

You can use netron to confirm the input and output names of the onnx model

X86 Linux PCvim inputs_outputs.txt--inputs data --input-size-list '3,224,224' --outputs 'resnetv24_dense0_fwd'

resnet50 in/output name

- The directory now contains the following files

.
|-- dataset.txt
|-- inputs_outputs.txt
|-- resnet50-sim.onnx
|-- resnet50.onnx
`-- space_shuttle_224x224.jpg

- Parse the model

Tip: The pegasus scripts are in ai-sdk/scripts; you can copy them to the models directory.

Use pegasus_import.sh to parse the model into an intermediate representation (IR). This generates resnet50-sim.json (model structure) and resnet50-sim.data (model weights).

X86 Linux PC

./pegasus_import.sh resnet50-sim/

- Modify the resnet50-sim_inputmeta.yml file

The model was trained on ImageNet, whose training set is normalized with mean [0.485, 0.456, 0.406] and std [0.229, 0.224, 0.225]. These values must be converted back to the 0 to 255 pixel range (reverse normalization) before they are written to the file. For the normalization values, refer to the PyTorch documentation.

# mean

0.485 * 255 = 123.675

0.456 * 255 = 116.28

0.406 * 255 = 103.53

# scale

1 / (0.229 * 255) = 0.01712

1 / (0.224 * 255) = 0.01751

1 / (0.225 * 255) = 0.01743

Based on the calculated mean and scale, modify the mean and scale values in resnet50-sim_inputmeta.yml:

mean:
- 123.675
- 116.28
- 103.53
scale:
- 0.01712
- 0.01751
- 0.01743

Complete resnet50-sim_inputmeta.yml after the change:

input_meta:
  databases:
  - path: dataset.txt
    type: TEXT
    ports:
    - lid: data_142
      category: image
      dtype: float32
      sparse: false
      tensor_name:
      layout: nchw
      shape:
      - 1
      - 3
      - 224
      - 224
      fitting: scale
      preprocess:
        reverse_channel: true
        mean:
        - 123.675
        - 116.28
        - 103.53
        scale:
        - 0.01712
        - 0.01751
        - 0.01743
        preproc_node_params:
          add_preproc_node: false
          preproc_type: IMAGE_RGB
          # preproc_dtype_converter:
          # quantizer: asymmetric_affine
          # qtype: uint8
          # scale: 1.0
          # zero_point: 0
          preproc_image_size:
          - 224
          - 224
          preproc_crop:
            enable_preproc_crop: false
            crop_rect:
            - 0
            - 0
            - 224
            - 224
          preproc_perm:
          - 0
          - 1
          - 2
          - 3
      redirect_to_output: false
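The conversion follows from rewriting the normalization for a pixel value x in the 0 to 255 range: (x / 255 - mean) / std = (x - mean * 255) * (1 / (std * 255)). The sketch below recomputes the per-channel values from the ImageNet statistics quoted in this section. The function name `to_inputmeta_mean_scale` and the rounding precision are illustrative choices, not part of the ACUITY Toolkit.

```python
def to_inputmeta_mean_scale(mean, std, max_pixel=255.0):
    """Convert 0..1 normalization statistics to 0..255 mean and scale values.

    (x / 255 - mean) / std  ==  (x - mean * 255) * (1 / (std * 255))
    """
    mean_255 = [round(m * max_pixel, 3) for m in mean]
    scale = [round(1.0 / (s * max_pixel), 5) for s in std]
    return mean_255, scale


# ImageNet statistics quoted in this section.
imagenet_mean = [0.485, 0.456, 0.406]
imagenet_std = [0.229, 0.224, 0.225]

mean_255, scale = to_inputmeta_mean_scale(imagenet_mean, imagenet_std)
print("mean:", mean_255)
print("scale:", scale)
```

The same helper can be reused for a model trained with different normalization statistics by passing that model's mean and std.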

- Quantize the model

Use pegasus_quantize.sh to quantize the model to uint8.

X86 Linux PC

./pegasus_quantize.sh resnet50-sim/ uint8 10

- Compile the model

Use ./pegasus_export_ovx.sh to compile the model into NBG model format.

X86 Linux PC

./pegasus_export_ovx.sh resnet50-sim/ uint8

The NBG model is saved in resnet50-sim/wksp/resnet50-sim_uint8_nbg_unify/network_binary.nb

Board-side inference ResNet50

Enter the resnet50 example directory

cd ai-sdk/examples/resnet50

Compile example

make AI_SDK_PLATFORM=a733

make install AI_SDK_PLATFORM=a733 INSTALL_PREFIX=./

Parameter description:

AI_SDK_PLATFORM: specifies the SoC; the options are a733 and t527.

INSTALL_PREFIX: specifies the installation path.

Run example

Set environment variables

export LD_LIBRARY_PATH=$LD_LIBRARY_PATH:/home/rock/ai-sdk/viplite-tina/lib/aarch64-none-linux-gnu/NPU_VERSION # NPU_SW_VERSION

Replace the trailing NPU_VERSION path component with the NPU_SW_VERSION for your chip: select v2.0 for A733 and v1.13 for T527. For NPU version selection, refer to the NPU version comparison table.
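This guide uses two different version labels per chip: NPU_VERSION on the PC side and NPU_SW_VERSION on the board side. The sketch below collects them in one lookup and builds the export line for a given platform. The table and function names are illustrative, and it assumes that the library directory is named exactly after the NPU_SW_VERSION string (for example `v2.0`), which should be checked against the actual contents of viplite-tina/lib.

```python
# Version labels as stated in this guide; the table layout is illustrative.
NPU_VERSIONS = {
    "a733": {"npu_version": "v3", "npu_sw_version": "v2.0"},
    "t527": {"npu_version": "v2", "npu_sw_version": "v1.13"},
}

# Library root used in the export command of this guide.
VIPLITE_LIB_ROOT = "/home/rock/ai-sdk/viplite-tina/lib/aarch64-none-linux-gnu"


def viplite_lib_dir(platform, lib_root=VIPLITE_LIB_ROOT):
    """Return the assumed VIPLite library directory for a platform name."""
    key = platform.lower()
    if key not in NPU_VERSIONS:
        raise ValueError(f"unknown platform {platform!r}; expected a733 or t527")
    # Assumption: the directory name equals the NPU_SW_VERSION string.
    return f"{lib_root}/{NPU_VERSIONS[key]['npu_sw_version']}"


def export_line(platform):
    """Build the shell line that appends the library directory to LD_LIBRARY_PATH."""
    return f"export LD_LIBRARY_PATH=$LD_LIBRARY_PATH:{viplite_lib_dir(platform)}"


print(export_line("a733"))
```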

Enter the example installation directory

cd INSTALL_PREFIX/etc/npu/resnet50

# ./resnet50 nbg_model input_picture

./resnet50 model/resnet50.nb ./input_data/dog_224_224.jpg

The example automatically installs the resnet50.nb model provided by Radxa. You can also pass the path of an NBG model that you converted yourself.

resnet50 demo input image

(.venv) rock@radxa-cubie-a7a:~/ai-sdk/examples/resnet50/etc/npu/resnet50$ ./resnet50 ./model/network_binary.nb ./input_data/dog_224_224.jpg

./resnet50 nbg input

VIPLite driver software version 2.0.3.2-AW-2024-08-30

viplite init OK.

VIPLite driver version=0x00020003...

VIP cid=0x1000003b, device_count=1

* device[0] core_count=1

awnn_init total: 4.47 ms.

vip_create_network ./model/network_binary.nb: 13.10 ms.

input 0 dim 224 224 3 1, data_format=2, name=input/output[0], elements=1833508979, scale=0.018657, zero_point=113

create input buffer 0: 150528

output 0 dim 1000 1, data_format=2, name=uid_1_out_0, elements=1000, scale=0.131327, zero_point=44

create output buffer 0: 1000

memory pool size=1606656 bytes

load_param ./model/network_binary.nb: 0.19 ms.

prepare network ./model/network_binary.nb: 2.58 ms.

set network io ./model/network_binary.nb: 0.01 ms.

awnn_create total: 15.93 ms.

get jpeg success.

trans data success.

memcpy(0xffff96348000, 0xffff96162010, 150528) load_input_data: 0.04 ms.

vip_flush_buffer input: 0.01 ms.

awnn_set_input_buffers total: 0.06 ms.

awnn_set_input_buffers success.

vip_run_network: 8.30 ms.

vip_flush_buffer output: 0.01 ms.

int8/uint8 1000 memcpy: 0.00 ms.

tensor to fp: 0.02 ms.

awnn_run total: 8.35 ms.

awnn_run success.

class_postprocess.cpp run.

========== top5 ==========

class id: 231, prob: 13.395374, label: collie

class id: 230, prob: 12.082102, label: Shetland sheepdog, Shetland sheep dog, Shetland

class id: 169, prob: 10.900157, label: borzoi, Russian wolfhound

class id: 160, prob: 8.930249, label: Afghan hound, Afghan

class id: 224, prob: 7.222996, label: groenendael

class_postprocess success.

awnn_destroy total: 1.47 ms.

awnn_uninit total: 0.70 ms.
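The log reports a scale and zero_point for the output tensor and a "tensor to fp" step, and the printed prob values are larger than 1, which suggests they are dequantized scores and not normalized probabilities. The sketch below shows how such scores could be recovered and normalized, assuming the usual asymmetric affine mapping real = scale * (q - zero_point). The softmax step is an addition for illustration and not something the example is known to perform, and the raw uint8 values are hypothetical.

```python
import math


def dequantize(q_values, scale, zero_point):
    """Map quantized integers to floats: real = scale * (q - zero_point)."""
    return [scale * (q - zero_point) for q in q_values]


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def top_k(values, k=5):
    """Return (index, value) pairs for the k largest values."""
    order = sorted(range(len(values)), key=lambda i: values[i], reverse=True)
    return [(i, values[i]) for i in order[:k]]


# Hypothetical uint8 outputs of a 4-class toy model.
# scale and zero_point are the output tensor values printed in the log above.
raw = [146, 44, 136, 90]
scores = dequantize(raw, scale=0.131327, zero_point=44)
probs = softmax(scores)

for class_id, p in top_k(probs, k=2):
    print(class_id, round(p, 4))
```

Under this assumption, a raw value of 146 dequantizes to about 13.3954, which is close to the top score printed for class 231 in the log.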