NPU Development Guide

The Dragon series products from Renesas feature SoCs equipped with the Qualcomm® Hexagon™ Processor (NPU), a hardware accelerator designed specifically for AI inference. To run inference on the NPU, pre-trained models must first be ported with the QAIRT (Qualcomm® AI Runtime) SDK. Qualcomm® provides the following SDKs to help NPU developers port their AI models to the NPU:

- Model Quantization Library: AIMET
- Model Porting SDK: QAIRT
- Model Application Library: QAI-APPBUILDER

Qualcomm® NPU Software Stack

QAIRT

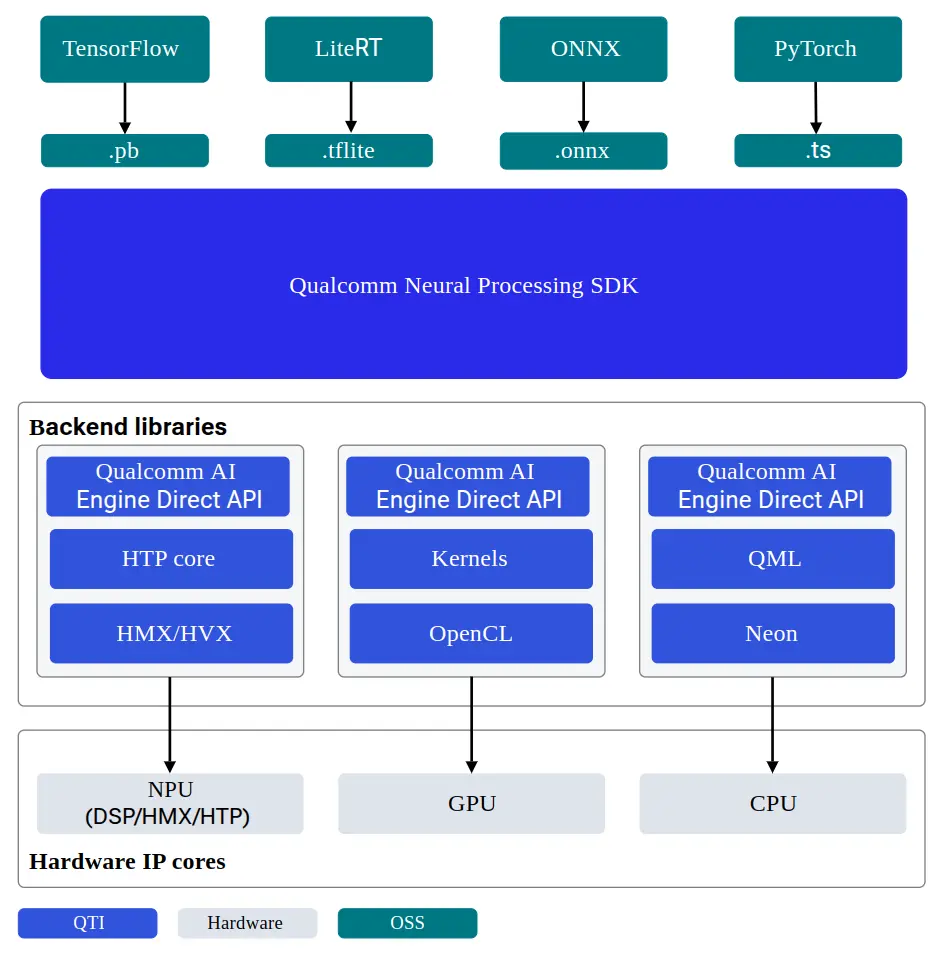

The QAIRT (Qualcomm® AI Runtime) SDK is a software package that integrates Qualcomm® AI software products, including Qualcomm® AI Engine Direct, the Qualcomm® Neural Processing SDK, and Qualcomm® Genie. It provides developers with the tools needed to port and deploy AI models on Qualcomm® hardware accelerators, along with the runtime for running models on the CPU, GPU, and NPU.

Supported Inference Backends

- CPU
- GPU
- NPU

QAIRT SDK Architecture

QAIRT Model Formats

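QAIRT supports three model file formats, which differ in the inference backends they support and in whether a model file can be reused across operating systems (Cross-OS) and across chips (Cross-Chip):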

| Format | Backend | Cross-OS | Cross-Chip |
|---|---|---|---|
| Library | CPU / GPU / NPU | No | Yes |
| DLC | CPU / GPU / NPU | Yes | Yes |
| Context Binary | NPU | Yes | No |
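The format table can be read as a simple decision rule: keep the formats that support the target backend and satisfy any portability requirement. The Python sketch below encodes the table as data to make that rule explicit; `ModelFormat` and `candidate_formats` are hypothetical helpers for illustration, not part of the QAIRT SDK.

```python
from __future__ import annotations

from dataclasses import dataclass


# Hypothetical helper (not a QAIRT API): one row of the model format table.
@dataclass(frozen=True)
class ModelFormat:
    name: str
    backends: frozenset[str]
    cross_os: bool    # can the same file be reused on another operating system?
    cross_chip: bool  # can the same file be reused on another SoC?


# Rows transcribed from the QAIRT model format table.
QAIRT_FORMATS = (
    ModelFormat("Library", frozenset({"CPU", "GPU", "NPU"}), cross_os=False, cross_chip=True),
    ModelFormat("DLC", frozenset({"CPU", "GPU", "NPU"}), cross_os=True, cross_chip=True),
    ModelFormat("Context Binary", frozenset({"NPU"}), cross_os=True, cross_chip=False),
)


def candidate_formats(backend: str, need_cross_os: bool = False,
                      need_cross_chip: bool = False) -> list[str]:
    """Return the names of the formats that meet the given deployment constraints."""
    return [
        f.name
        for f in QAIRT_FORMATS
        if backend in f.backends
        and (f.cross_os or not need_cross_os)
        and (f.cross_chip or not need_cross_chip)
    ]
```

For example, `candidate_formats("NPU", need_cross_os=True, need_cross_chip=True)` leaves only `["DLC"]`, while Context Binary is a candidate only when the model is built for one specific chip.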

This guide focuses on porting and deploying models on the NPU, specifically on the conversion and inference workflow for the Context Binary format, which offers the best memory usage and performance. For converting to other model formats, or for running inference on other backends, refer to the QAIRT SDK documentation.

SoC Architecture Reference Table

| SoC | dsp_arch | soc_id |
|---|---|---|
| QCS6490 | v68 | 35 |
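Because the model format table marks Context Binary as not portable across chips (Cross-Chip: No), a Context Binary has to be generated for one specific target SoC, and the `dsp_arch` and `soc_id` columns are the values typically used to describe that target. The Python sketch below simply turns the SoC table into a lookup. `SOC_TABLE` and `target_params` are hypothetical names, and the returned keys mirror the table's column names rather than a confirmed QAIRT configuration schema; check the QAIRT SDK documentation for the exact option or field names its tools expect.

```python
from __future__ import annotations

# Values transcribed from the SoC Architecture Reference Table.
# Only QCS6490 is listed there; extend this mapping for other SoCs.
SOC_TABLE: dict[str, dict[str, object]] = {
    "QCS6490": {"dsp_arch": "v68", "soc_id": 35},
}


def target_params(soc: str) -> dict[str, object]:
    """Look up the NPU target parameters for an SoC name (case-insensitive).

    Hypothetical helper: the key names follow the table columns and are an
    assumption, not a confirmed QAIRT configuration format.
    """
    key = soc.strip().upper()
    if key not in SOC_TABLE:
        raise KeyError(f"unknown SoC {soc!r}; known SoCs: {sorted(SOC_TABLE)}")
    return dict(SOC_TABLE[key])  # return a copy so callers cannot modify the table
```

Returning a copy keeps the reference table read-only even if a caller adjusts the parameters for a custom build.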


AIMET

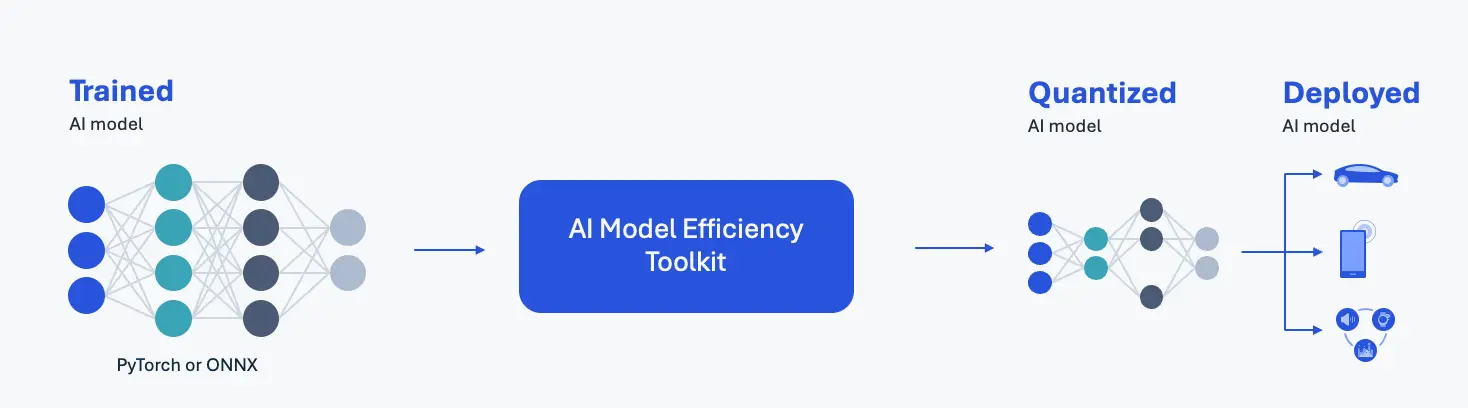

AIMET (AI Model Efficiency Toolkit) is a quantization toolkit for deep learning models, such as PyTorch and ONNX models. By quantizing a model, AIMET reduces its computational load and memory usage and thereby improves its runtime performance. With AIMET, developers can iterate quickly to find the quantization configuration that best balances accuracy and latency. Quantized models exported from AIMET can be compiled and deployed on the Qualcomm® NPU with QAIRT, or run directly with ONNX Runtime.
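As a concrete illustration of how quantization reduces memory usage, the sketch below applies generic asymmetric (affine) 8-bit quantization in plain Python: each float is mapped to an integer in 0..255 through a scale and a zero point, so each value needs 1 byte instead of the 4 bytes of a float32. It shows only the underlying arithmetic; the function names are hypothetical, and this is not AIMET's API or the exact method AIMET uses to choose quantization parameters.

```python
from __future__ import annotations


def compute_scale_zero_point(x_min: float, x_max: float, num_bits: int = 8) -> tuple[float, int]:
    """Affine (asymmetric) quantization parameters for an unsigned num_bits grid."""
    qmin, qmax = 0, 2 ** num_bits - 1
    # Widen the range to include 0.0 so that zero maps exactly to an integer.
    x_min, x_max = min(x_min, 0.0), max(x_max, 0.0)
    scale = (x_max - x_min) / (qmax - qmin)
    if scale == 0.0:  # all-zero input: any positive scale works
        scale = 1.0
    zero_point = round(qmin - x_min / scale)
    return scale, min(max(zero_point, qmin), qmax)


def quantize(values: list[float], scale: float, zero_point: int, num_bits: int = 8) -> list[int]:
    """Map floats to integers: q = clamp(round(x / scale) + zero_point)."""
    qmax = 2 ** num_bits - 1
    return [min(max(round(v / scale) + zero_point, 0), qmax) for v in values]


def dequantize(q_values: list[int], scale: float, zero_point: int) -> list[float]:
    """Map integers back to approximate floats: x ~ (q - zero_point) * scale."""
    return [(q - zero_point) * scale for q in q_values]


# Example: quantize a few weights and measure the round-trip error.
weights = [-1.0, -0.5, 0.0, 0.25, 1.0]
scale, zp = compute_scale_zero_point(min(weights), max(weights))
q = quantize(weights, scale, zp)
restored = dequantize(q, scale, zp)
max_error = max(abs(a - b) for a, b in zip(weights, restored))
print(q, max_error)
```

Rounding to the nearest grid point keeps the reconstruction error of in-range values within about half a quantization step (`scale / 2`), and 0.0 is recovered exactly because the zero point is an integer.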

AIMET OVERVIEW


QAI-APPBUILDER

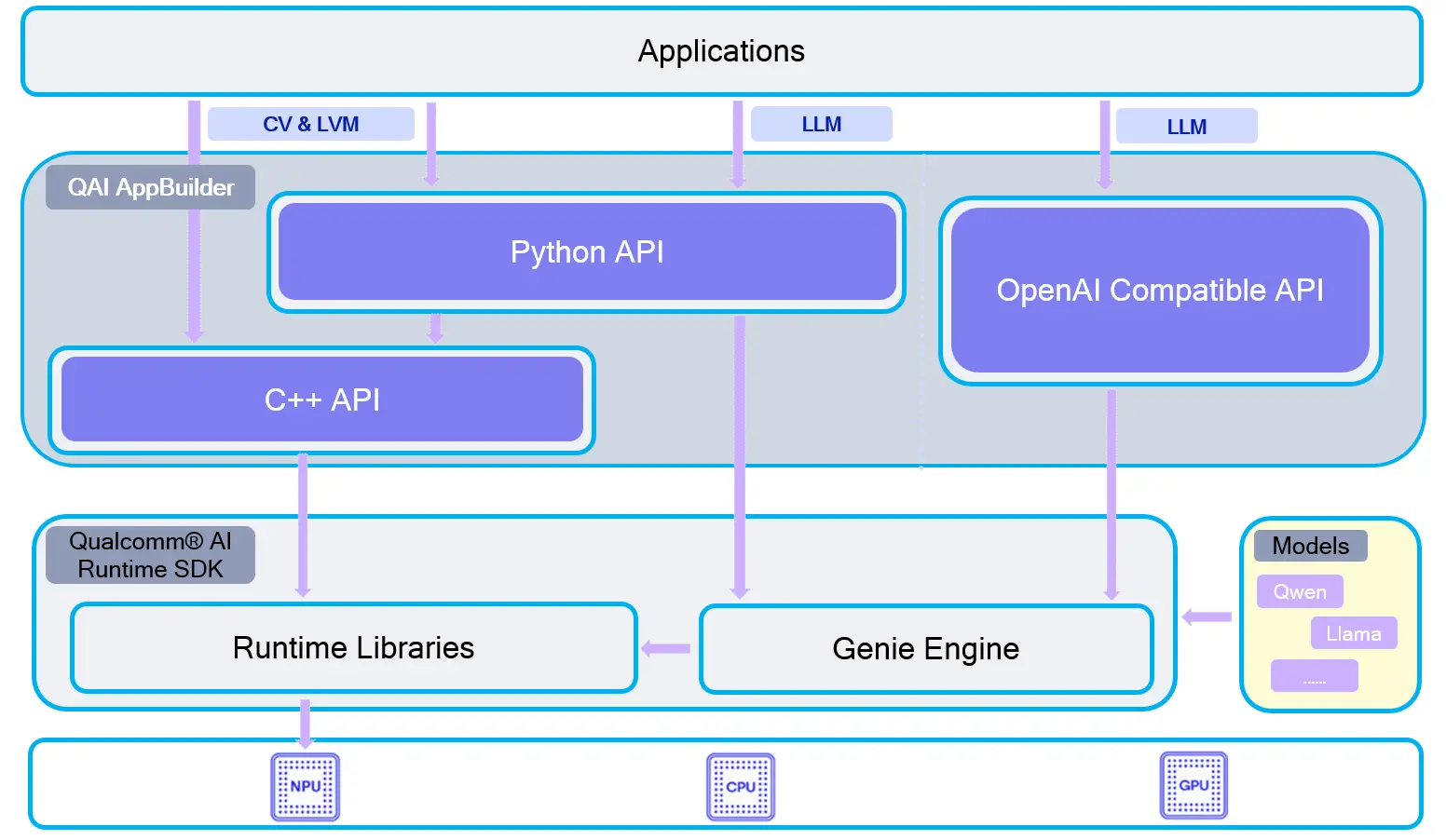

Quick AI Application Builder (QAI AppBuilder) helps developers easily deploy AI models and design AI applications on Qualcomm® SoC platforms equipped with Qualcomm® Hexagon™ Processor (NPU) using the Qualcomm® AI Runtime SDK. It encapsulates the model deployment APIs into a set of simplified interfaces for loading models onto the NPU and performing inference. QAI AppBuilder significantly reduces the complexity of model deployment for developers and provides multiple demos as references for designing custom AI applications.

QAI-APPBUILDER Architecture