YOLOv5-det Object Detection

- Enter the Radxa Model-zoo YOLOv5 directory

cd Radxa-Model-Zoo/sample/YOLOv5

- Download a model. F32, F16, and INT8 quantized models are available

# F32

wget https://github.com/radxa-edge/TPU-Edge-AI/releases/download/model-zoo/yolov5s_v6.1_3output_fp32_1b.bmodel

# F16

wget https://github.com/radxa-edge/TPU-Edge-AI/releases/download/model-zoo/yolov5s_v6.1_3output_fp16_1b.bmodel

# INT8

wget https://github.com/radxa-edge/TPU-Edge-AI/releases/download/model-zoo/yolov5s_v6.1_3output_int8_1b.bmodel
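The three file names above differ only in the precision tag, so a download script can derive the URL from it. The following is a minimal sketch: the helper name `bmodel_url` is hypothetical, and the base URL and file-name pattern are copied from the wget commands above.

```python
# Base URL and file-name pattern copied from the wget commands above.
BASE_URL = "https://github.com/radxa-edge/TPU-Edge-AI/releases/download/model-zoo"
PRECISIONS = ("fp32", "fp16", "int8")


def bmodel_url(precision: str) -> str:
    """Return the download URL for one precision (hypothetical helper)."""
    precision = precision.lower()
    if precision not in PRECISIONS:
        raise ValueError(f"unknown precision {precision!r}; expected one of {PRECISIONS}")
    return f"{BASE_URL}/yolov5s_v6.1_3output_{precision}_1b.bmodel"


if __name__ == "__main__":
    # Print one URL per available precision.
    for p in PRECISIONS:
        print(bmodel_url(p))
```

Only one of the three models is needed; the run commands in this guide use the F16 file.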

- Download the test image into the data folder

mkdir images && cd images

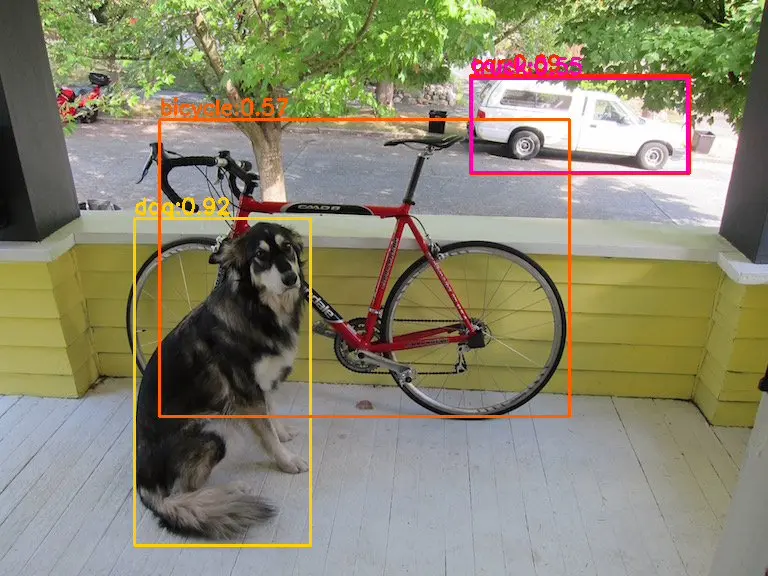

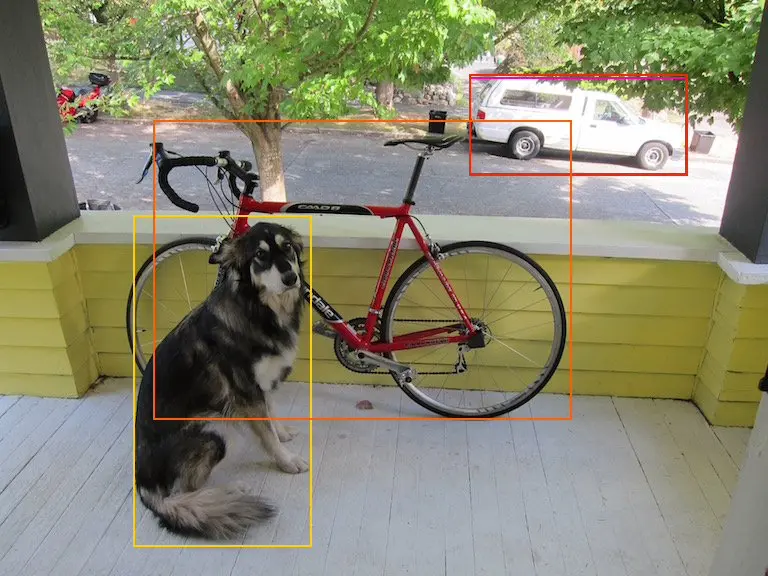

wget https://github.com/radxa-edge/TPU-Edge-AI/releases/download/model-zoo/dog_bike_car.jpg

# Return to the YOLOv5 directory; the run commands below pass --input ./images from there

cd ..

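- Create a virtual environment

A virtual environment is required; installing the packages system-wide may interfere with other applications. For details on using virtual environments, see here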

python3 -m virtualenv .venv

source .venv/bin/activate
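To confirm that the environment is active before installing anything, the interpreter itself can be asked. This is a small sketch using only the standard library; the function name `in_virtualenv` is an illustrative choice.

```python
import sys


def in_virtualenv() -> bool:
    """Return True when the interpreter runs inside a virtual environment."""
    # Older virtualenv releases set sys.real_prefix; venv and newer virtualenv
    # releases make sys.prefix differ from sys.base_prefix instead.
    if hasattr(sys, "real_prefix"):
        return True
    return sys.prefix != getattr(sys, "base_prefix", sys.prefix)


if __name__ == "__main__":
    print("virtual environment active:", in_virtualenv())
    print("sys.prefix:", sys.prefix)
```

If this prints `False` after `source .venv/bin/activate`, the shell is probably still using the system `python3`.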

- Install the Python dependencies

pip3 install --upgrade pip

pip3 install numpy

pip3 install https://github.com/radxa-edge/TPU-Edge-AI/releases/download/v0.1.0/sophon_arm-3.7.0-py3-none-any.whl
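A quick check that the packages are importable from the active environment can save a failed run later. The sketch below assumes that the wheel installs a top-level module named `sophon`; that module name is an assumption and is not stated in this guide.

```python
import importlib.util


def is_installed(module_name: str) -> bool:
    """Return True if a top-level module can be found without importing it."""
    return importlib.util.find_spec(module_name) is not None


if __name__ == "__main__":
    # "sophon" is the assumed top-level module name of the sophon_arm wheel.
    for name in ("numpy", "sophon"):
        status = "ok" if is_installed(name) else "missing"
        print(f"{name}: {status}")
```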

- Run the Python samples

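The python directory provides the following Python samples:

| No. | Python sample | Description |
| --- | --- | --- |
| 1 | yolov5_opencv.py | OpenCV decoding, OpenCV preprocessing, SAIL inference |
| 2 | yolov5_bmcv.py | SAIL decoding, BMCV preprocessing, SAIL inference |

- Run yolov5_opencv.py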

export LD_LIBRARY_PATH=/opt/sophon/libsophon-current/lib:$LD_LIBRARY_PATH

export PYTHONPATH=$PYTHONPATH:/opt/sophon/sophon-opencv-latest/opencv-python/

python3 python/yolov5_opencv.py --input ./images --bmodel ./yolov5s_v6.1_3output_fp16_1b.bmodel

Parameter description

yolov5_opencv.py [--input IMG_PATH] [--bmodel BMODEL]

--input: path of the image to run inference on; the path of an image folder or of a video is also accepted

--bmodel: path of the bmodel used for inference

(.venv) linaro@bm1684:/data/ssd/docs_check/Radxa-Model-Zoo/sample/YOLOv5$ python3 python/yolov5_opencv.py --input ./images --bmodel ./yolov5s_v6.1_3output_fp16_1b.bmodel

[BMRT][bmcpu_setup:406] INFO:cpu_lib 'libcpuop.so' is loaded.

bmcpu init: skip cpu_user_defined

open usercpu.so, init user_cpu_init

[BMRT][load_bmodel:1084] INFO:Loading bmodel from [./yolov5s_v6.1_3output_fp16_1b.bmodel]. Thanks for your patience...

[BMRT][load_bmodel:1023] INFO:pre net num: 0, load net num: 1

INFO:root:load ./yolov5s_v6.1_3output_fp16_1b.bmodel success!

INFO:root:1, img_file: ./images/dog_bike_car.jpg

sampleFactor=6, cinfo->num_components=3 (1x2, 1x1, 1x1)

Open /dev/jpu successfully, device index = 0, jpu fd = 24, vpp fd = 25

INFO:root:result saved in ./results/yolov5s_v6.1_3output_fp16_1b.bmodel_images_opencv_python_result.json

INFO:root:------------------ Predict Time Info ----------------------

INFO:root:decode_time(ms): 16.56

INFO:root:preprocess_time(ms): 31.80

INFO:root:inference_time(ms): 20.42

INFO:root:postprocess_time(ms): 150.16

all done.

The detection results are saved to

./results/yolov5s_v6.1_3output_fp16_1b.bmodel_images_opencv_python_result.json

The annotated images are saved to

./results/images
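The "Predict Time Info" lines in the log can be totalled to see where the time goes. This is a sketch that parses the four timing lines exactly as they appear in the opencv run above; the regular expression and the function name `parse_times` are illustrative, not part of the sample.

```python
import re
from typing import Dict

# Timing lines copied from the yolov5_opencv.py log above.
LOG = """\
INFO:root:decode_time(ms): 16.56
INFO:root:preprocess_time(ms): 31.80
INFO:root:inference_time(ms): 20.42
INFO:root:postprocess_time(ms): 150.16
"""

# Captures the stage name before "_time(ms):" and the value after it.
TIME_LINE = re.compile(r"INFO:root:(\w+)_time\(ms\):\s*([\d.]+)")


def parse_times(log: str) -> Dict[str, float]:
    """Map each stage name to its time in milliseconds."""
    return {stage: float(ms) for stage, ms in TIME_LINE.findall(log)}


if __name__ == "__main__":
    times = parse_times(LOG)
    total = sum(times.values())
    # Print each stage with its share of the total.
    for stage, ms in times.items():
        print(f"{stage:<12}{ms:8.2f} ms  {ms / total:6.1%}")
    print(f"{'total':<12}{total:8.2f} ms")
```

In this single run, postprocessing takes the largest share of the total by a wide margin.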

- Run yolov5_bmcv.py

export LD_LIBRARY_PATH=/opt/sophon/libsophon-current/lib:$LD_LIBRARY_PATH

export PYTHONPATH=$PYTHONPATH:/opt/sophon/sophon-opencv-latest/opencv-python/

python3 python/yolov5_bmcv.py --input ./images --bmodel ./yolov5s_v6.1_3output_fp16_1b.bmodel

Parameter description

yolov5_bmcv.py [--input IMG_PATH] [--bmodel BMODEL]

--input: path of the image to run inference on; the path of an image folder or of a video is also accepted

--bmodel: path of the bmodel used for inference

(.venv) linaro@bm1684:/data/ssd/docs_check/Radxa-Model-Zoo/sample/YOLOv5$ python3 python/yolov5_bmcv.py --input ./images --bmodel ./yolov5s_v6.1_3output_fp16_1b.bmodel

[BMRT][bmcpu_setup:406] INFO:cpu_lib 'libcpuop.so' is loaded.

bmcpu init: skip cpu_user_defined

open usercpu.so, init user_cpu_init

[BMRT][load_bmodel:1084] INFO:Loading bmodel from [./yolov5s_v6.1_3output_fp16_1b.bmodel]. Thanks for your patience...

[BMRT][load_bmodel:1023] INFO:pre net num: 0, load net num: 1

INFO:root:1, img_file: ./images/dog_bike_car.jpg

sampleFactor=6, cinfo->num_components=3 (1x2, 1x1, 1x1)

Open /dev/jpu successfully, device index = 0, jpu fd = 43, vpp fd = 44

INFO:root:result saved in ./results/yolov5s_v6.1_3output_fp16_1b.bmodel_images_bmcv_python_result.json

INFO:root:------------------ Predict Time Info ----------------------

INFO:root:decode_time(ms): 25.40

INFO:root:preprocess_time(ms): 2.68

INFO:root:inference_time(ms): 17.16

INFO:root:postprocess_time(ms): 153.20

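all done.

The detection results are saved to

./results/yolov5s_v6.1_3output_fp16_1b.bmodel_images_bmcv_python_result.json

The annotated images are saved to

./results/images

The bmcv sample does not currently draw class labels on the images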
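The stage times reported by the two samples can be compared side by side. The sketch below uses the numbers from the two logs above; each comes from a single run on one image, so the differences are indicative rather than a benchmark. The function name `compare` is an illustrative choice.

```python
# Stage times in ms, copied from the two logs above (one image, one run each).
OPENCV = {"decode": 16.56, "preprocess": 31.80, "inference": 20.42, "postprocess": 150.16}
BMCV = {"decode": 25.40, "preprocess": 2.68, "inference": 17.16, "postprocess": 153.20}


def compare(a, b):
    """Return {stage: (a_ms, b_ms, b_ms - a_ms)} for stages present in both."""
    return {stage: (a[stage], b[stage], b[stage] - a[stage]) for stage in a if stage in b}


if __name__ == "__main__":
    print(f"{'stage':<12}{'opencv':>9}{'bmcv':>9}{'diff':>9}")
    for stage, (o, b, d) in compare(OPENCV, BMCV).items():
        print(f"{stage:<12}{o:9.2f}{b:9.2f}{d:+9.2f}")
    total_o, total_b = sum(OPENCV.values()), sum(BMCV.values())
    print(f"{'total':<12}{total_o:9.2f}{total_b:9.2f}{total_b - total_o:+9.2f}")
```

In these two runs the largest difference is in preprocessing (31.80 ms with OpenCV, 2.68 ms with BMCV), while postprocessing is nearly identical.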

- FAQ

For more details on model conversion, model quantization, and model accuracy testing, please refer to