DeepSeek-R1 TPU

DeepSeek-R1 is developed by Hangzhou DeepSeek.

This model is fully open-source, including all training techniques and model weights, achieving performance on par with the closed-source OpenAI-o1.

DeepSeek also distilled six smaller models from DeepSeek-R1 for the open-source community, based on models including Qwen2.5 and Llama3.1.

This document explains how to use TPU-MLIR to deploy two of the distilled models, DeepSeek-R1-Distill-Qwen-1.5B and DeepSeek-R1-Distill-Qwen-7B, on the Airbox for TPU-accelerated inference.

Deployment of DeepSeek-R1 Qwen2.5 Distilled Model

Clone the repository

git clone https://github.com/zifeng-radxa/LLM-TPU.git

Open the DeepSeek-R1 project directory

cd LLM-TPU/models/language_model/python_demo

This example provides INT4 quantized versions of deepseek-r1-distill-qwen-1.5b and deepseek-r1-distill-qwen-7b along with precompiled cpython dynamic libraries for download.

Users can refer to DeepSeek-R1 Model Conversion to convert different quantization versions of the DeepSeek-R1 distilled model.

Users can also refer to DeepSeek-R1 cpython Dynamic Library Compilation to compile their own cpython interface binding files.

Download the precompiled models using modelscope

- Install modelscope via pip3

pip3 install modelscope

- Download the deepseek-r1-distill-qwen-1-5b_TPU repository

modelscope download --model radxa/deepseek-r1-distill-qwen-1-5b_TPU

- Download the deepseek-r1-distill-qwen-7b_TPU repository

modelscope download --model radxa/deepseek-r1-distill-qwen-7b_TPU

Set up the environment

It is recommended to create a virtual environment to avoid conflicts with other applications. See here for virtual environment usage.

python3 -m virtualenv .venv

source .venv/bin/activate

Install dependencies

pip3 install --upgrade pip

pip3 install transformers==4.45.1 Jinja2==3.1.5

Set environment variables

Copy the precompiled library into the current directory, then use the ldd command to check whether chat.cpython-38-aarch64-linux-gnu.so links to libbmlib.so in LLM-TPU/support/lib_soc/lib*.

cp deepseek-r1-distill-qwen-1-5b_TPU/chat.cpython-38-aarch64-linux-gnu.so .

ldd chat.cpython-38-aarch64-linux-gnu.so

If the link is incorrect, run the following command, making sure to specify the correct LLM-TPU path, and then run ldd again.

export LD_LIBRARY_PATH=LLM-TPU/support/lib_soc:$LD_LIBRARY_PATH

Start DeepSeek-R1

Terminal mode

python3 pipeline.py --model_path ./deepseek-r1-distill-qwen-1-5b_TPU/qwen2.5-1.5b_int4_seq8192_1dev.bmodel --devid 0 --dir_path ./deepseek-r1-distill-qwen-1-5b_TPU

-m, --model_path: Specify the model path

-d, --devid: Specify the TPU device number; the default is 0

-p, --dir_path: Specify the directory containing the tokenizer folder
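Before launching, the library check from the "Set environment variables" step can also be scripted. The following is a minimal sketch, not part of LLM-TPU: the helper names `parse_ldd`, `bmlib_resolves_under`, and `check_shared_object` are hypothetical, and it assumes the usual `name => /path (0xaddr)` line format printed by ldd.

```python
import os
import subprocess
from pathlib import Path


def parse_ldd(output):
    """Map each library name in ldd output to its resolved path, or None if not found."""
    resolved = {}
    for line in output.splitlines():
        if "=>" not in line:
            continue  # e.g. the dynamic loader line, which has no "=>"
        name, _, target = line.partition("=>")
        target = target.strip()
        if target.startswith("not found"):
            resolved[name.strip()] = None
        else:
            # Drop the trailing load address such as "(0x0000ffff8a000000)".
            resolved[name.strip()] = target.split(" (")[0]
    return resolved


def bmlib_resolves_under(ldd_output, lib_dir):
    """Return True if libbmlib.so resolves to a file located under lib_dir."""
    wanted = Path(os.path.abspath(lib_dir))
    for name, path in parse_ldd(ldd_output).items():
        if name.startswith("libbmlib.so") and path is not None:
            return wanted in Path(os.path.abspath(path)).parents
    return False


def check_shared_object(so_file, lib_dir):
    """Run ldd on so_file and report whether libbmlib.so comes from lib_dir."""
    result = subprocess.run(["ldd", so_file], capture_output=True, text=True, check=True)
    return bmlib_resolves_under(result.stdout, lib_dir)
```

For example, `check_shared_object("chat.cpython-38-aarch64-linux-gnu.so", "LLM-TPU/support/lib_soc")` returns False when libbmlib.so is missing or resolves from another directory, which is the case where LD_LIBRARY_PATH needs to be exported as described above. The directory argument is an assumption and should point at the actual LLM-TPU checkout.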

DeepSeek-R1 Model Conversion

Users can refer to this section to convert the DeepSeek-R1 distilled Qwen2.5 models into bmodel files with different quantization types.

Prepare the environment on an x86 workstation

See TPU-MLIR Installation for setting up TPU-MLIR.

Clone the repository

git clone https://github.com/zifeng-radxa/LLM-TPU.git

Download the DeepSeek-R1 open-source distilled models from Hugging Face

Tip: Ensure that git lfs is installed.

- DeepSeek-R1-Distill-Qwen-1.5B

git clone https://huggingface.co/deepseek-ai/DeepSeek-R1-Distill-Qwen-1.5B

- DeepSeek-R1-Distill-Qwen-7B

git clone https://huggingface.co/deepseek-ai/DeepSeek-R1-Distill-Qwen-7B

Create a virtual environment in LLM-TPU/models/Qwen2_5

python3 -m virtualenv .venv

source .venv/bin/activate

pip3 install --upgrade pip

pip install transformers_stream_generator einops tiktoken accelerate onnx torch==2.0.1 torchvision==0.15.2 transformers==4.45.2

Align the model environment

- DeepSeek-R1-Distill-Qwen-1.5B

cp ./compile/files/Qwen2.5-1.5B-Instruct/modeling_qwen2.py .venv/lib/python3.10/site-packages/transformers/models/qwen2

- DeepSeek-R1-Distill-Qwen-7B

cp ./compile/files/Qwen2.5-7B-Instruct/modeling_qwen2.py .venv/lib/python3.10/site-packages/transformers/models/qwen2

Export ONNX file

Here we take a sequence length of 8192 as an example.

cd compile

python3 export_onnx.py --model_path your_torch_model --seq_length 8192 --device cpu

--model_path: Path to the downloaded DeepSeek-R1-Distill-Qwen folder

--seq_length: Fixed sequence length for the export; options include 512, 1024, 2048, 8192, etc.

Convert to bmodel file

- DeepSeek-R1-Distill-Qwen-1.5B

./compile.sh --mode int4 --name qwen2.5-1.5b --addr_mode io_alone --seq_length 8192

- DeepSeek-R1-Distill-Qwen-7B

./compile.sh --mode int4 --name qwen2.5-7b --addr_mode io_alone --seq_length 2048

--mode: Quantization mode; options are int4 and int8

--seq_length: Sequence length; must match the seq_length specified when exporting the ONNX file

--name: Model name; options are qwen2.5-1.5b and qwen2.5-7b

--addr_mode: Address allocation mode of the network; io_alone is used here

Tip: Generating the bmodel takes about 1 hour or more. At least 64 GB of memory and more than 100 GB of free disk space are recommended; otherwise the build is likely to fail with an out-of-memory or "no space left" error.
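Because a failed conversion can cost an hour or more, it may be worth checking the workstation against the recommended memory and disk figures first. The sketch below is one way to do that on Linux; the function names are hypothetical, and the thresholds simply restate the recommendation above, interpreted as GiB, which is an assumption.

```python
import os
import shutil

GIB = 1024 ** 3


def resource_problems(ram_bytes, free_disk_bytes, min_ram_gib=64, min_disk_gib=100):
    """Return a list of shortfalls; an empty list means the host meets both thresholds."""
    problems = []
    if ram_bytes < min_ram_gib * GIB:
        problems.append(f"RAM: {ram_bytes / GIB:.1f} GiB, recommended {min_ram_gib} GiB")
    if free_disk_bytes <= min_disk_gib * GIB:
        problems.append(
            f"free disk: {free_disk_bytes / GIB:.1f} GiB, recommended more than {min_disk_gib} GiB"
        )
    return problems


def host_resources(path="."):
    """Read total physical RAM and the free disk space at path (Linux only)."""
    ram = os.sysconf("SC_PAGE_SIZE") * os.sysconf("SC_PHYS_PAGES")
    free = shutil.disk_usage(path).free
    return ram, free
```

Calling `resource_problems(*host_resources("."))` from the compile directory lists any shortfall. A machine sold as 64 GB usually reports slightly less than 64 GiB of usable RAM, so `min_ram_gib` may need to be lowered a little to avoid a false warning.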

DeepSeek-R1 cpython Dynamic Library Compilation

Precompiled files are already included in the download package. If you download them through modelscope, you don’t need to compile them.

pip3 install pybind11[global]

cmake -B build && cmake --build build

cp build/*cpython* .
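One situation where rebuilding is needed is an interpreter mismatch: the precompiled file name carries the tag cpython-38-aarch64-linux-gnu, and CPython only imports an extension module whose suffix is one it accepts. The sketch below checks a file name against the running interpreter; the function name `importable_as` is hypothetical, while `importlib.machinery.EXTENSION_SUFFIXES` is the standard-library list of accepted suffixes.

```python
import importlib.machinery


def importable_as(filename, module_name="chat", suffixes=None):
    """Return True if filename is module_name plus a suffix the interpreter accepts."""
    if suffixes is None:
        # Suffixes recognised by the running interpreter, most specific first.
        suffixes = importlib.machinery.EXTENSION_SUFFIXES
    return any(filename == module_name + suffix for suffix in suffixes)
```

On the Python that will run pipeline.py, `importable_as("chat.cpython-38-aarch64-linux-gnu.so")` returning False suggests that the precompiled binding does not match that interpreter and that the library should be compiled locally with the commands above.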

Inference Performance

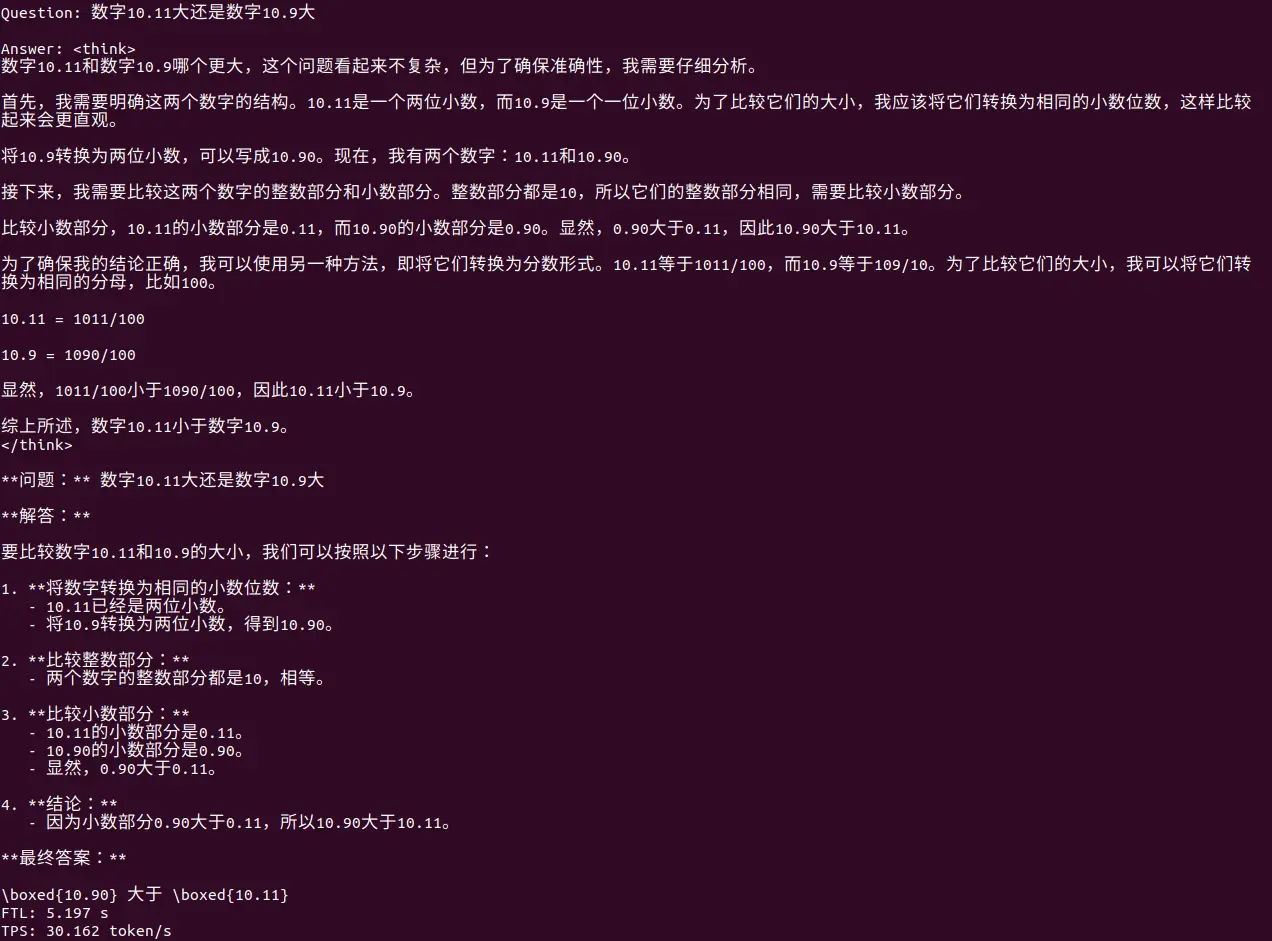

| Model | Quantization | Sequence Length | First Token Latency (s) | Throughput (tokens/s) |
|---|---|---|---|---|
| deepseek-r1-distill-qwen-1.5b | INT4 | 8192 | 5.159 | 30.448 |
| deepseek-r1-distill-qwen-7b | INT4 | 2048 | 2.843 | 11.008 |
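As a rough reading of these figures, the time to produce a full response can be approximated as the first-token latency plus the number of generated tokens divided by the throughput. The sketch below applies that approximation to the two measured rows. The function name and the 512-token response length are illustrative assumptions, and real first-token latency varies with prompt length.

```python
def estimated_response_seconds(first_token_latency_s, tokens_per_second, new_tokens):
    """Approximate wall-clock time: first-token latency plus steady decoding time."""
    if tokens_per_second <= 0:
        raise ValueError("tokens_per_second must be positive")
    return first_token_latency_s + new_tokens / tokens_per_second


# Figures copied from the table; 512 generated tokens is an assumed workload.
MEASURED = {
    "deepseek-r1-distill-qwen-1.5b": (5.159, 30.448),
    "deepseek-r1-distill-qwen-7b": (2.843, 11.008),
}

for model, (latency, tps) in MEASURED.items():
    seconds = estimated_response_seconds(latency, tps, new_tokens=512)
    print(f"{model}: about {seconds:.1f} s for 512 tokens")
```

Under these assumptions the 1.5B model needs about 22.0 s and the 7B model about 49.4 s for a 512-token response. The two rows were measured at different sequence lengths, so the comparison is indicative only.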