Simulate YOLOv5 Segmentation Inference

This document demonstrates how to use rknn-toolkit2 to simulate inference of the YOLOv5 segmentation model on an x86 PC, without a development board. For the required environment setup, refer to RKNN Installation.

Prepare the Model

This example uses a pretrained ONNX model from the rknn_model_zoo. The model is converted and simulated on the PC.

- If you use conda, activate the rknn conda environment first (X86 Linux PC):

      conda activate rknn

- Download the yolov5s-seg.onnx model (X86 Linux PC):

      cd rknn_model_zoo/examples/yolov5_seg/model
      # Download the pretrained yolov5s-seg.onnx model
      bash download_model.sh

  Tip: If you encounter network issues, visit this page to download the model manually and place it in the corresponding folder.

- Copy the ONNX model to a file with the .rknn extension (for PC simulation only). The copy is still an ONNX file; the .rknn name is a placeholder that the modified rknn_executor.py in the simulation section maps back to the .onnx file (X86 Linux PC):

      cp yolov5s-seg.onnx yolov5s-seg.rknn

- (Optional) Convert the ONNX model to yolov5s-seg.rknn using rknn-toolkit2 (X86 Linux PC):

      cd rknn_model_zoo/examples/yolov5_seg/python
      python3 convert.py <onnx_model> <TARGET_PLATFORM> <dtype> <output_rknn_path>
      # Example:
      # python3 convert.py ../model/yolov5s-seg.onnx rk3588 i8 ../model/yolov5s-seg.rknn

  Parameter explanation:

  - <onnx_model>: Path to the ONNX model.
  - <TARGET_PLATFORM>: Target NPU platform, such as rk3562, rk3566, rk3568, rk3576, rk3588, rk1808, rv1109, rv1126.
  - <dtype>: Quantization type (i8 for int8, fp for fp16). Default is i8.
  - <output_rknn_path>: Path to save the RKNN model. Defaults to the same directory as the ONNX model, with the filename yolov5s-seg.rknn.

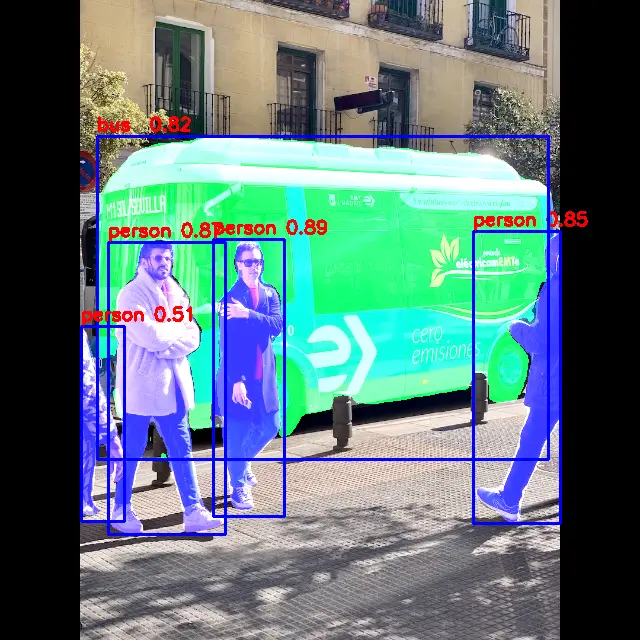
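The argument rules for convert.py described above can be checked before the script is invoked. The following is a minimal sketch and is not part of rknn_model_zoo: the helper name `build_convert_command` and the constants `SUPPORTED_PLATFORMS` and `SUPPORTED_DTYPES` are hypothetical, and their values are simply copied from the parameter explanation, on the assumption that the lists given there are complete.

```python
import shlex

# Values copied from the parameter explanation (assumption: the lists are complete).
SUPPORTED_PLATFORMS = ("rk3562", "rk3566", "rk3568", "rk3576", "rk3588",
                       "rk1808", "rv1109", "rv1126")
SUPPORTED_DTYPES = ("i8", "fp")


def build_convert_command(onnx_model, platform, dtype="i8", output_path=None):
    """Return the argv list for convert.py after validating the arguments.

    Hypothetical helper for illustration: it only assembles the command
    and does not run the conversion itself.
    """
    if platform not in SUPPORTED_PLATFORMS:
        raise ValueError(f"unsupported platform: {platform!r}")
    if dtype not in SUPPORTED_DTYPES:
        raise ValueError(f"unsupported dtype: {dtype!r}")
    argv = ["python3", "convert.py", onnx_model, platform, dtype]
    # The output path is optional; convert.py falls back to its default when omitted.
    if output_path is not None:
        argv.append(output_path)
    return argv


if __name__ == "__main__":
    cmd = build_convert_command("../model/yolov5s-seg.onnx", "rk3588", "i8",
                                "../model/yolov5s-seg.rknn")
    # Print a shell-ready command line that can be pasted into a terminal.
    print(shlex.join(cmd))
```

Returning an argv list rather than a single string keeps the command safe to pass to `subprocess.run` without shell quoting issues.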

ONNX Model Inference on PC

Run the inference script:

python3 yolov5_seg.py --model_path ../model/yolov5s-seg.onnx --img_show

Example output:

person @ (212 242 285 510) 0.871

person @ (111 240 223 535) 0.850

person @ (472 233 559 519) 0.831

bus @ (97 134 549 458) 0.799

person @ (80 328 125 517) 0.470

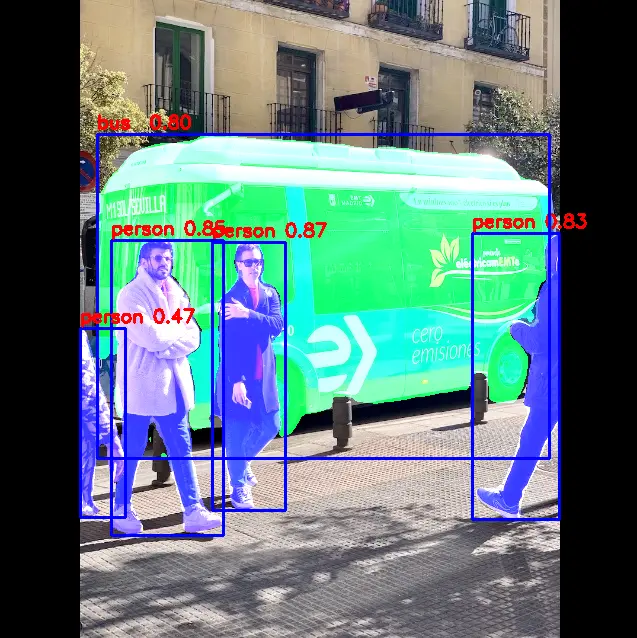
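The printed detections are easier to post-process once they are parsed into structured records. The sketch below assumes each line has the form `label @ (a b c d) score`, as in the example output, and that the four integers are the corner coordinates x1 y1 x2 y2 of the box (an assumption inferred from the values, not stated in this document). The names `Detection` and `parse_detections` are hypothetical.

```python
import re
from typing import List, NamedTuple, Tuple


class Detection(NamedTuple):
    label: str
    box: Tuple[int, int, int, int]  # assumed order: x1, y1, x2, y2
    score: float


# Matches lines such as: person @ (212 242 285 510) 0.871
_LINE_RE = re.compile(
    r"^\s*(.+?)\s*@\s*\((\d+) (\d+) (\d+) (\d+)\)\s*([0-9.]+)\s*$"
)


def parse_detections(text: str) -> List[Detection]:
    """Parse script output into Detection records, skipping unrelated lines."""
    detections = []
    for line in text.splitlines():
        match = _LINE_RE.match(line)
        if match is None:
            continue  # blank lines and log messages are ignored
        box = tuple(int(v) for v in match.group(2, 3, 4, 5))
        detections.append(Detection(match.group(1), box, float(match.group(6))))
    return detections


if __name__ == "__main__":
    sample = "person @ (212 242 285 510) 0.871\nbus @ (97 134 549 458) 0.799"
    for det in parse_detections(sample):
        print(det)
```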

RKNN Model Simulation Inference on PC

- Install the required dependencies with pip3 (X86 Linux PC):

      pip3 install torchvision==0.19.0 pycocotools

- Modify the rknn_model_zoo/py_utils/rknn_executor.py file as follows (back up the original file first):

      from rknn.api import RKNN


      class RKNN_model_container():
          def __init__(self, model_path, target=None, device_id=None) -> None:
              rknn = RKNN()
              # Image list used as the quantization calibration dataset
              DATASET_PATH = '../../../datasets/COCO/coco_subset_20.txt'
              # Map the placeholder path "*.rknn" back to the ONNX file "*.onnx"
              onnx_model = model_path[:-4] + 'onnx'
              # Inputs are normalized as (x - 0) / 255
              rknn.config(mean_values=[[0, 0, 0]], std_values=[[255, 255, 255]], target_platform=target)
              rknn.load_onnx(model=onnx_model)
              rknn.build(do_quantization=True, dataset=DATASET_PATH)
              # No target is passed here, so inference runs on the PC simulator
              rknn.init_runtime()
              self.rknn = rknn

          def run(self, inputs):
              # Wrap a single input so that inference always receives a list or tuple
              if not isinstance(inputs, (list, tuple)):
                  inputs = [inputs]
              result = self.rknn.inference(inputs=inputs)
              return result

          def release(self):
              self.rknn.release()
              self.rknn = None

- Run the simulation inference script (X86 Linux PC):

      python3 yolov5_seg.py --target <TARGET_PLATFORM> --model_path <RKNN_MODEL_PATH> --img_show
      # Example:
      # python3 yolov5_seg.py --target rk3588 --model_path ../model/yolov5s-seg.rknn --img_show

  Parameters:

  - --target: Specifies the NPU platform to simulate. Options include rk3562, rk3566, rk3568, rk3576, rk3588, rk1808, rv1109, rv1126.
  - --model_path: Path to the RKNN model to simulate.
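The modified executor derives the ONNX path with `model_path[:-4] + 'onnx'`, which assumes the path ends in a four-character `rknn` suffix and silently produces a wrong name otherwise. A slightly more defensive variant is sketched below; the helper name `onnx_path_for` is hypothetical, and `PurePosixPath` is used because this guide targets an X86 Linux PC.

```python
from pathlib import PurePosixPath


def onnx_path_for(model_path: str) -> str:
    """Return the .onnx path that sits next to a .rknn placeholder path.

    Hypothetical helper: same result as model_path[:-4] + 'onnx' for a
    path ending in '.rknn', but other extensions raise an error instead
    of yielding a malformed file name.
    """
    path = PurePosixPath(model_path)
    if path.suffix != ".rknn":
        raise ValueError(f"expected a .rknn path, got: {model_path!r}")
    return str(path.with_suffix(".onnx"))


if __name__ == "__main__":
    print(onnx_path_for("../model/yolov5s-seg.rknn"))
```

Inside `RKNN_model_container.__init__`, the slice expression could be swapped for a call to such a helper if stricter input checking is wanted.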

Example output:

person @ (213 239 284 516) 0.882

person @ (109 240 224 535) 0.869

person @ (473 231 560 523) 0.845

bus @ (97 136 548 459) 0.821

person @ (80 328 124 519) 0.499
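One way to quantify how closely the simulated RKNN output tracks the ONNX output is to pair detections by label and box overlap. The sketch below is only an illustration: the function names `iou` and `compare` are hypothetical, the detections are copied from the two example outputs in this document, and the four integers are assumed to be x1 y1 x2 y2 corner coordinates.

```python
def iou(box_a, box_b):
    """Intersection over union of two (x1, y1, x2, y2) boxes."""
    ix1, iy1 = max(box_a[0], box_b[0]), max(box_a[1], box_b[1])
    ix2, iy2 = min(box_a[2], box_b[2]), min(box_a[3], box_b[3])
    inter = max(0, ix2 - ix1) * max(0, iy2 - iy1)
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


# (label, box, score) tuples copied from the example outputs in this document.
ONNX_RESULTS = [
    ("person", (212, 242, 285, 510), 0.871),
    ("person", (111, 240, 223, 535), 0.850),
    ("person", (472, 233, 559, 519), 0.831),
    ("bus", (97, 134, 549, 458), 0.799),
    ("person", (80, 328, 125, 517), 0.470),
]
SIM_RESULTS = [
    ("person", (213, 239, 284, 516), 0.882),
    ("person", (109, 240, 224, 535), 0.869),
    ("person", (473, 231, 560, 523), 0.845),
    ("bus", (97, 136, 548, 459), 0.821),
    ("person", (80, 328, 124, 519), 0.499),
]


def compare(reference, candidate):
    """Pair each reference detection with the best-overlapping candidate of the same label.

    Returns (label, iou, score_delta) rows; score_delta is None when no
    candidate with the same label exists.
    """
    rows = []
    for label, box, score in reference:
        same_label = [c for c in candidate if c[0] == label]
        if not same_label:
            rows.append((label, 0.0, None))
            continue
        best = max(same_label, key=lambda c: iou(box, c[1]))
        rows.append((label, iou(box, best[1]), best[2] - score))
    return rows


if __name__ == "__main__":
    for label, overlap, delta in compare(ONNX_RESULTS, SIM_RESULTS):
        delta_text = "n/a" if delta is None else f"{delta:+.3f}"
        print(f"{label:7s} IoU={overlap:.3f} score_delta={delta_text}")
```

For the two listings in this document, every matched pair overlaps with an IoU above 0.9 and the simulated scores are slightly higher, which suggests the quantized simulation stays close to the ONNX reference on this image.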

- Simulation inference result (the simulator only emulates NPU computation; actual performance and accuracy must be confirmed by running inference on the target board):