Deploy YOLOv8 Object Detection on the Board

This document demonstrates how to run on-board inference of the YOLOv8 object detection model on the RK3588. For the required environment setup, please refer to RKNN Installation.

This example uses a pretrained ONNX model from rknn_model_zoo to walk through the complete process of converting the model and running inference on the board. The target platform is RK3588.

Using RKNN to deploy YOLOv8 involves two steps:

- On a PC, use rknn-toolkit2 to convert models from different frameworks into RKNN format.

- On the board, use the Python API of rknn-toolkit-lite2 to run model inference.
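For orientation, the board-side step boils down to a handful of RKNNLite calls. The sketch below assumes rknn-toolkit-lite2 (Python module `rknnlite`) is installed on the board and that `image` has already been preprocessed to whatever input the model expects. `run_rknn_lite` is a hypothetical helper name and pinning to NPU core 0 is an arbitrary example choice; this is not the code of the yolov8.py demo.

```python
# Sketch only: assumes rknn-toolkit-lite2 (module "rknnlite") is installed on the board.


def run_rknn_lite(model_path, image):
    """Hypothetical helper: load an .rknn model, run one inference, release the runtime."""
    # Lazy import, so this file can be imported on machines without the NPU runtime.
    from rknnlite.api import RKNNLite

    rknn_lite = RKNNLite()
    try:
        if rknn_lite.load_rknn(model_path) != 0:
            raise RuntimeError(f"load_rknn failed: {model_path}")
        # Pin to NPU core 0 (an arbitrary choice for this example).
        if rknn_lite.init_runtime(core_mask=RKNNLite.NPU_CORE_0) != 0:
            raise RuntimeError("init_runtime failed")
        # inference() takes a list of inputs and returns a list of output arrays.
        return rknn_lite.inference(inputs=[image])
    finally:
        rknn_lite.release()
```

On the board, `outputs = run_rknn_lite("../model/yolov8.rknn", img)` would return the raw output tensors; turning them into labelled boxes is a separate post-processing step, which the demo script performs before printing its results.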

Model Conversion on PC

Radxa provides a pre-converted yolov8.rknn model, so you can skip this section and go directly to YOLOv8 Inference on Board.

- If using Conda, first activate the RKNN environment on the X86 Linux PC:

conda activate rknn

- Download the YOLOv8 ONNX model on the X86 Linux PC:

cd rknn_model_zoo/examples/yolov8/model
# Download the pretrained yolov8n.onnx model
bash download_model.sh

If you encounter network issues, you can download the corresponding model manually from this page and place it in the rknn_model_zoo/examples/yolov8/model folder.

- Convert the ONNX model to RKNN format using rknn-toolkit2 on the X86 Linux PC:

cd rknn_model_zoo/examples/yolov8/python
python3 convert.py ../model/yolov8n.onnx rk3588

Parameter Explanation:

- <onnx_model>: Specifies the path to the ONNX model.
- <TARGET_PLATFORM>: Specifies the NPU platform name. Options include rk3562, rk3566, rk3568, rk3576, rk3588, rk1808, rv1109, and rv1126.
- <dtype> (optional): Specifies the data type: i8 (int8 quantization) or fp (fp16, no quantization). The default is i8.
- <output_rknn_path> (optional): Specifies the path for saving the RKNN model. By default, it is saved in the same directory as the ONNX model with the filename yolov8.rknn.
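To make the positional arguments and their defaults concrete, here is a hypothetical mirror of them in Python, followed by a sketch of the rknn-toolkit2 calls a conversion like this typically makes. `parse_convert_args` and `convert` are illustrative names, not the contents of convert.py; the accepted dtypes are limited to the two documented above, and the normalization (pixel / 255) and calibration dataset are assumptions.

```python
import os

# Platforms and dtypes as listed for convert.py in this guide.
PLATFORMS = {"rk3562", "rk3566", "rk3568", "rk3576", "rk3588",
             "rk1808", "rv1109", "rv1126"}
DTYPES = {"i8", "fp"}


def parse_convert_args(argv):
    """Hypothetical mirror of: <onnx_model> <TARGET_PLATFORM> [dtype] [output_rknn_path]."""
    if len(argv) < 2:
        raise ValueError("usage: <onnx_model> <TARGET_PLATFORM> [dtype] [output_rknn_path]")
    onnx_model, platform = argv[0], argv[1]
    if platform not in PLATFORMS:
        raise ValueError(f"unsupported platform: {platform}")
    dtype = argv[2] if len(argv) > 2 else "i8"  # default: int8 quantization
    if dtype not in DTYPES:
        raise ValueError(f"unsupported dtype: {dtype}")
    # Default output: yolov8.rknn in the same directory as the ONNX model.
    default_out = os.path.join(os.path.dirname(onnx_model), "yolov8.rknn")
    output = argv[3] if len(argv) > 3 else default_out
    # Return a do_quantization flag: True for i8, False for fp.
    return onnx_model, platform, dtype == "i8", output


def convert(onnx_model, platform, do_quant, output, dataset=None):
    """Sketch of the rknn-toolkit2 conversion flow (PC side only)."""
    from rknn.api import RKNN  # lazy import: needs rknn-toolkit2 installed

    rknn = RKNN()
    try:
        # Assumption: inputs are scaled as pixel / 255 (mean 0, std 255).
        rknn.config(mean_values=[[0, 0, 0]], std_values=[[255, 255, 255]],
                    target_platform=platform)
        if rknn.load_onnx(model=onnx_model) != 0:
            raise RuntimeError("load_onnx failed")
        # int8 quantization needs a calibration dataset (a text file listing image paths).
        if rknn.build(do_quantization=do_quant, dataset=dataset) != 0:
            raise RuntimeError("build failed")
        if rknn.export_rknn(output) != 0:
            raise RuntimeError("export_rknn failed")
    finally:
        rknn.release()
```

For example, `parse_convert_args(["../model/yolov8n.onnx", "rk3588"])` yields int8 quantization and the output path `../model/yolov8.rknn`, matching the defaults described above; you would then pass those values to `convert` together with your own calibration list (for example a hypothetical `dataset="calib.txt"`).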

- Copy the converted RKNN model (yolov8.rknn by default) to the target board.

YOLOv8 Inference on Board

- Install the rknn-model-zoo-rk3588 package on the board (Radxa OS) to obtain the YOLOv8 demo, which includes the pre-converted yolov8.rknn model:

sudo apt install rknn-model-zoo-rk3588

If you are using a CLI version, you can download the package manually from the deb package download page.

-

Run the YOLOv8 example code:

If using a model converted on the PC, copy it to the board and specify the model path using the

--model_pathparameter:Radxa OScd /usr/share/rknn_model_zoo/examples/yolov8/python

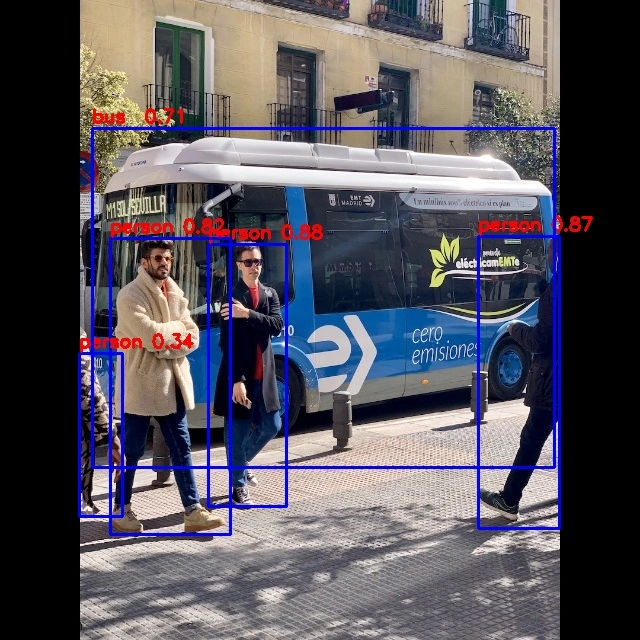

sudo python3 yolov8.py --model_path ../model/yolov8.rknn --img_saveExample output:

$ sudo python3 yolov8.py --model_path ../model/yolov8.rknn --img_save
import rknn failed, try to import rknnlite
--> Init runtime environment
I RKNN: [09:01:01.819] RKNN Runtime Information, librknnrt version: 1.6.0
I RKNN: [09:01:01.819] RKNN Driver Information, version: 0.8.2
W RKNN: [09:01:01.819] Current driver version: 0.8.2, recommend to upgrade the driver to version >= 0.8.8
I RKNN: [09:01:01.819] RKNN Model Information, version: 6, target platform: rk3588
W RKNN: [09:01:01.836] Query dynamic range failed. Ret code: RKNN_ERR_MODEL_INVALID.
done
Model-../model/yolov8.rknn is rknn model, starting val
infer 1/1
IMG: bus.jpg
person @ (211 241 282 507) 0.872
person @ (109 235 225 536) 0.860
person @ (477 225 560 522) 0.856
person @ (79 327 116 513) 0.306
bus @ (95 136 549 449) 0.860
Detection result saved to ./result/bus.jpg

Parameter Explanation:

- --model_path: Specifies the RKNN model path.
- --img_folder: Specifies the folder containing the images for inference. Default: ../model.
- --img_save: Whether to save the inference result images to the ./result folder. Default: False.
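The three options listed above could be declared with Python's argparse roughly as follows. This is a hypothetical mirror for illustration only, not the actual source of yolov8.py (which may define more options); treating --model_path as required is an assumption.

```python
import argparse


def build_parser():
    """Hypothetical parser mirroring the documented yolov8.py options."""
    parser = argparse.ArgumentParser(description="YOLOv8 RKNN demo options (sketch)")
    # Assumed required here: the demo needs a model to run.
    parser.add_argument("--model_path", type=str, required=True,
                        help="RKNN model path")
    parser.add_argument("--img_folder", type=str, default="../model",
                        help="folder containing images for inference")
    # A bare flag: present -> True, absent -> False (the documented default).
    parser.add_argument("--img_save", action="store_true",
                        help="save result images to ./result")
    return parser
```

Because --img_save is a flag rather than a key/value option, `--img_save` on its own enables saving and omitting it leaves the default of False.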

- When --img_save is set, the inference result images are saved in the ./result folder.
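If you want to post-process the console output, for example to keep only confident detections, each detection line follows the pattern `label @ (n n n n) score`, as in `person @ (211 241 282 507) 0.872`. The hypothetical `parse_detections` helper below extracts those lines with a regular expression. It keeps the four box integers in the printed order and makes no assumption about which coordinate system they use.

```python
import re

# Matches demo output lines such as "person @ (211 241 282 507) 0.872".
# Labels may contain spaces (e.g. "traffic light").
DETECTION_RE = re.compile(
    r"^(?P<label>\w[\w ]*?) @ \((?P<box>-?\d+(?: -?\d+){3})\) (?P<score>\d*\.\d+)$")


def parse_detections(log_text, min_score=0.0):
    """Return (label, box, score) tuples for detection lines with score >= min_score."""
    results = []
    for line in log_text.splitlines():
        match = DETECTION_RE.match(line.strip())
        if not match:
            continue  # skip runtime logs, "IMG: ..." lines, etc.
        score = float(match.group("score"))
        if score >= min_score:
            box = tuple(int(v) for v in match.group("box").split())
            results.append((match.group("label"), box, score))
    return results
```

Applied to the example output with `min_score=0.5`, this would drop the low-confidence `person` detection (score 0.306) and keep the rest.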